17 Sep 2025

AI4DH at 3rd Multimodal AI Workshop

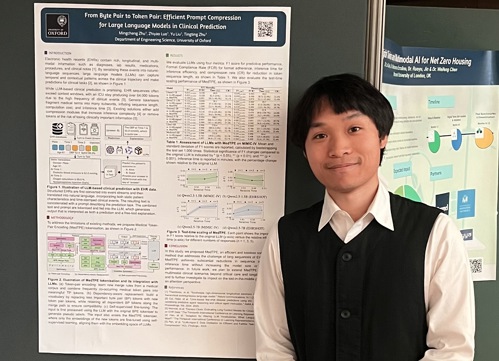

On 17 September 2025, members of the AI4DH Lab took part in the 3rd Multimodal AI Workshop, held at the Barbican Centre in London. Munib Mešinović, Chenqi Li, and Mingcheng Zhu each presented their work, showcasing the lab’s diverse contributions to the field of multimodal artificial intelligence.

Munib delivered an oral presentation on his framework for survival analysis using multimodal electronic health records (EHR). His work integrates diverse patient data from the EHR database into a unified model that improves risk prediction and provides interpretable results across multiple clinical outcomes.

Chenqi contributed in several formats. He gave a pitch on his research into multi-teacher distillation for foundation models, showing how knowledge from multiple large models can be combined to improve performance across different modalities. He also presented a poster on bridging foundation models for cross-modality prediction, which addresses how a model trained on one modality can work effectively with another. Beyond research, Chenqi's creative flair was recognised when he won the Best AI Photo Competition with an image generated using Nano Banana, highlighting the imaginative side of multimodal AI.

Mingcheng presented a poster on multimodal EHR event streams, tackling the challenge of handling very long sequences of clinical events. His proposed method compresses these event streams so that large language models (LLMs) can process them more efficiently while retaining the context critical for accurate prediction. His contribution was recognised with the Best Student Poster Award.

The workshop was an excellent opportunity for AI4DH to showcase its research in multimodal AI, exchange ideas with the broader community, and celebrate achievements in both technical innovation and creativity.