Explainable and Trustworthy AI for Digital Health

We focus on interpretability, fairness, and reliability to build AI tools that clinicians and patients can trust. Our work includes explainable modelling, uncertainty quantification, and ethical AI design for safe deployment in real-world healthcare.

Publications

- Mesinovic, Munib, et al. "DynaGraph: interpretable dynamic graph learning for temporal electronic health records." npj Digital Medicine (2026). paper, code

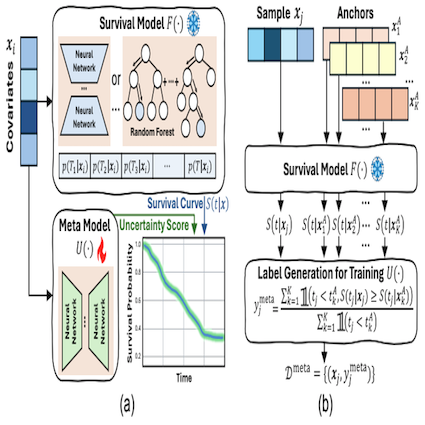

- Liu, Yu, et al. "SurvUnc: A Meta-Model Based Uncertainty Quantification Framework for Survival Analysis." Proceedings of the 31st ACM SIGKDD Conference on Knowledge Discovery and Data Mining. 2025. paper, code

- Chen, Zhikang, et al. "Think Consistently, Reason Efficiently: Energy-Based Calibration for Implicit Chain-of-Thought." arXiv preprint arXiv:2511.07124 (2025). paper

- Mesinovic, Munib, Max Buhlan, and Tingting Zhu. "Causal Graph Neural Networks for Healthcare." arXiv preprint arXiv:2511.02531 (2025). paper

- Mesinovic, Munib, Peter Watkinson, and Tingting Zhu. "Explainability in the age of large language models for healthcare." Communications Engineering 4.1 (2025): 128. paper