Data Centre Flexibility for Power Systems

Background and Challenges

The recent surge in artificial intelligence (AI), especially large language models (LLMs), has led to rapid growth in data centres dedicated to AI development. These AI-focused data centres rely heavily on GPUs and can be more energy-demanding than traditional, general-purpose, CPU-intensive data centres. For example, a latest-generation 192-thread AMD EPYC 9654 CPU has a rated power of only 360 W, while a single latest-generation NVIDIA B200 GPU can draw 1000 W. Another contributor is the rapidly expanding use of LLMs, which requires intensive computing. According to estimates by the Electric Power Research Institute (EPRI) (link: https://www.epri.com/research/products/3002028905), data centres now consume 4% of total annual electricity generation in the US, and this share could rise to as much as 9.1% by 2030, with AI being one of the main drivers. The increased energy demand for AI poses a major challenge for power grid infrastructure, especially when considered alongside ongoing efforts to electrify heating and transportation to achieve net-zero carbon emissions.

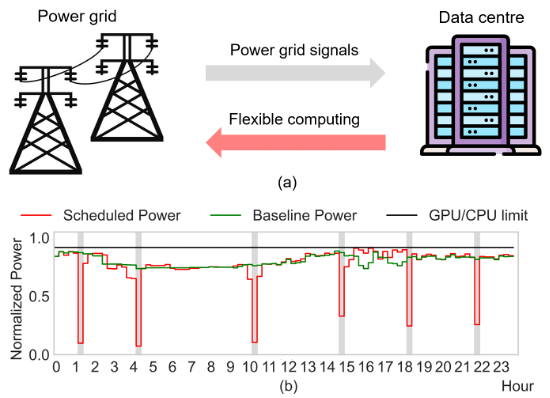

To mitigate the stress on power systems, data centres can provide substantial grid flexibility through the way they are operated. For example, computational jobs can be strategically shifted to times with abundant renewable generation, or to locations with a low risk of grid congestion. In addition, both CPUs and GPUs support hardware-level dynamic power control, which allows power consumption to be adjusted within 1 second.
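As a rough illustration of the temporal shifting idea, the sketch below assigns deferrable jobs to the hours with the most forecast renewable energy. It is a minimal greedy heuristic under simplifying assumptions, not the method used in the publications listed on this page: each job is assumed to fit in a single hour, and the names `Job`, `schedule_jobs`, and the forecast values are hypothetical and chosen only for this example.

```python
from dataclasses import dataclass


@dataclass
class Job:
    """Hypothetical deferrable computing job (illustrative only)."""
    name: str
    energy_kwh: float   # energy the job needs; assumed to fit in one hour
    deadline_hour: int  # latest hour index (inclusive) in which it may run


def schedule_jobs(jobs, renewable_kwh):
    """Greedy sketch: jobs with the earliest deadline choose first, and each
    job takes the allowed hour with the most renewable energy still unused."""
    remaining = list(renewable_kwh)  # copy so the caller's forecast is untouched
    plan = {}
    for job in sorted(jobs, key=lambda j: j.deadline_hour):
        last_hour = min(job.deadline_hour, len(remaining) - 1)
        allowed = range(last_hour + 1)
        best = max(allowed, key=lambda h: remaining[h])
        plan[job.name] = best
        # A negative remainder means the job would draw partly on
        # non-renewable supply during that hour.
        remaining[best] -= job.energy_kwh
    return plan


# Assumed hourly renewable forecast (kWh) and job list, for illustration only.
forecast = [10.0, 50.0, 30.0, 80.0]
jobs = [
    Job("A", energy_kwh=40.0, deadline_hour=1),
    Job("B", energy_kwh=40.0, deadline_hour=3),
    Job("C", energy_kwh=30.0, deadline_hour=3),
]
print(schedule_jobs(jobs, forecast))
```

A practical scheduler would also need to handle multi-hour jobs, compute capacity limits, and uncertainty in both the forecast and future job arrivals; the greedy rule here only conveys the basic intuition.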

However, data centre flexibility still faces challenges on several fronts. First, data centre operators have little incentive to offer grid flexibility, because providing it can reduce computing performance, which is one of a data centre's primary objectives. Accurate cost modelling of data centre flexibility is therefore important for giving a clear picture of the financial incentives. Second, operating data centre grid flexibility in real time is computationally challenging, given the large number of computing jobs inside a data centre and their varying characteristics and requirements. Additionally, uncertainty in data centre operations, such as the unpredictable arrival of future jobs, adds another layer of complexity.

Principal Investigator

Doctoral Students

Yihong Zhou

DPhil Student

Recent Publications

- Yihong Zhou, Ángel Paredes, Chaimaa Essayeh, and Thomas Morstyn. "AI-focused HPC Data Centers Can Provide More Power Grid Flexibility and at Lower Cost." arXiv preprint arXiv:2410.17435 (2024). https://arxiv.org/abs/2410.17435

- Ángel Paredes, Yihong Zhou, Chaimaa Essayeh, José A. Aguado, and Thomas Morstyn. "Exploiting Data Centres and Local Energy Communities Synergies for Market Participation." In 2024 IEEE PES Innovative Smart Grid Technologies Europe (ISGT EUROPE), pp. 1-5. IEEE, 2024. https://ieeexplore.ieee.org/abstract/document/10863347

- Yihong Zhou, Ángel Paredes, Chaimaa Essayeh, and Thomas Morstyn. "Evaluating and Comparing the Potentials in Primary Response for GPU and CPU Data Centers." In 2024 IEEE Power & Energy Society General Meeting (PESGM), pp. 1-5. IEEE, 2024. https://ieeexplore.ieee.org/abstract/document/1068906