Deep learning has achieved many breakthroughs across science and engineering disciplines, ranging from autonomous driving to protein structure prediction. The impact it has on quantum technologies is no exception.

Classical machine learning for quantum computing is a central focus of Professor Natalia Ares’ research at the University of Oxford. Her group develops intelligent and scalable algorithms to address core challenges in operating quantum computers, including optimisation, control, and prediction of quantum device properties.

The work centres on electrostatically defined semiconductor quantum dot devices, which confine individual charges and enable precise spin control. Recently, the group has demonstrated fully autonomous tuning of spin qubits [1, 2]. Many of their machine learning methods can also extend naturally to other quantum computing platforms. This post highlights three novel achievements of the Ares group: optimizing quantum device layouts with neural operators, extracting feature graphs from experimental data with transformers and end-to-end learning [3], and efficient meta-learning of quantum dynamics in data-scarce regimes [4]. While deep learning algorithms are usually executed on modern GPUs, the same hardware can also greatly accelerate the generation of training data. For meta-learning quantum dynamics, for instance, CUDA-Q on NVIDIA GPUs reduces simulation times by more than two orders of magnitude.

Device optimization

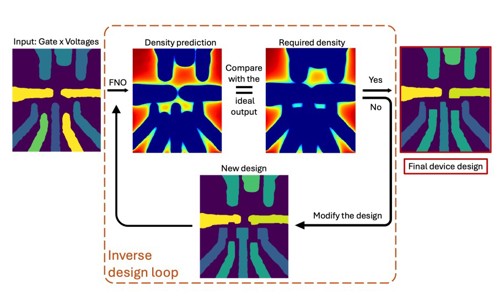

Designing the gate electrode layout for electrostatically defined quantum dot devices is challenging because both the nanoscale geometry and the applied voltages shape the confinement potential, which in turn determines the charge densities and ultimately the qubit properties. While conventional physics solvers can accurately model these charge distributions, they are computationally expensive and thus impractical for exploring large design spaces. In addition, their outputs are not readily compatible with automated design pipelines, limiting their effectiveness for systematic device optimisation.

To address this challenge, the Ares group, working in collaboration with NVIDIA, employs Fourier Neural Operators (FNOs), a class of machine learning models trained on data generated by conventional solvers. FNOs learn the mapping from gate geometries and voltages to the resulting confinement potentials and charge densities. Once trained, they can predict these quantities in milliseconds, delivering orders-of-magnitude speed-up over traditional simulations.
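
To give a feel for the core operation inside an FNO, the following minimal sketch applies a single spectral convolution to a real 1D signal: transform to Fourier space, keep and reweight only the lowest modes, and transform back. It is a plain-Python illustration rather than the group's model. The function name `spectral_conv_1d` and the example weights are assumptions made here; a real FNO learns its weights, stacks several such layers with pointwise nonlinearities, and acts on 2D or 3D fields.

```python
import cmath
import math


def spectral_conv_1d(signal, weights):
    """Apply one Fourier-layer style spectral convolution to a real 1D signal.

    `weights` holds one (possibly complex) multiplier per retained mode,
    starting at frequency 0. All higher frequencies are discarded.
    """
    n = len(signal)
    modes = len(weights)
    if modes < 1 or modes > n // 2:
        raise ValueError("keep between 1 and n // 2 Fourier modes")

    # Forward DFT restricted to the lowest `modes` frequencies,
    # each multiplied by its weight.
    coeffs = []
    for k in range(modes):
        c = sum(signal[j] * cmath.exp(-2j * math.pi * k * j / n) for j in range(n))
        coeffs.append(c * weights[k])

    # Inverse transform back to a real signal. The factor 2 accounts for the
    # conjugate (negative-frequency) partner of every non-zero mode.
    output = []
    for j in range(n):
        value = coeffs[0].real
        for k in range(1, modes):
            value += 2.0 * (coeffs[k] * cmath.exp(2j * math.pi * k * j / n)).real
        output.append(value / n)
    return output


# Illustrative input: a constant offset plus one slow oscillation on 8 grid points.
grid = [1.0 + math.cos(2.0 * math.pi * j / 8) for j in range(8)]
unchanged = spectral_conv_1d(grid, [1.0, 1.0])   # identity weights
mean_only = spectral_conv_1d(grid, [1.0, 0.0])   # suppress the oscillation
```

Because the layer acts on Fourier modes rather than on grid points, the same weights can be applied to inputs sampled at different resolutions, which is one reason neural operators are attractive as surrogates for physics solvers.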

This approach enables inverse design: instead of merely predicting the charge density resulting from a given device configuration, we can specify a target density and allow the model to infer suitable gate layouts and voltage settings. Although the optimisation loop is still under development, early results indicate that combining FNOs with differentiable gate modifications provides a promising route towards automated and scalable device design.
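
The inverse-design idea can be sketched with a toy example. Here a fixed linear map stands in for the trained surrogate, and gradient descent adjusts two gate voltages until the predicted output matches a target. Everything in the block is an assumption for illustration: the `RESPONSE` matrix, the function names, and the learning rate are hypothetical, and the source notes that the actual optimisation loop is still under development.

```python
# Hypothetical stand-in for a trained surrogate: a fixed linear response
# from two gate voltages to two density values. Not a real device model.
RESPONSE = [[1.0, 0.2],
            [0.2, 1.0]]


def surrogate(voltages):
    """Predict the (toy) density from gate voltages."""
    return [sum(a * v for a, v in zip(row, voltages)) for row in RESPONSE]


def loss_gradient(voltages, target):
    """Gradient of the squared error between prediction and target."""
    residual = [p - t for p, t in zip(surrogate(voltages), target)]
    return [
        2.0 * sum(residual[i] * RESPONSE[i][j] for i in range(len(RESPONSE)))
        for j in range(len(voltages))
    ]


def inverse_design(target, initial, learning_rate=0.1, steps=500):
    """Search for voltages whose predicted density matches `target`."""
    voltages = list(initial)
    for _ in range(steps):
        grad = loss_gradient(voltages, target)
        voltages = [v - learning_rate * g for v, g in zip(voltages, grad)]
    return voltages


# Build a target from known voltages, then try to recover them.
target_density = surrogate([0.5, -0.3])
found = inverse_design(target_density, [0.0, 0.0])
```

With a neural surrogate, the hand-written gradient would be replaced by automatic differentiation through the network, but the loop keeps the same shape.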

By combining conventional physics simulations with modern machine learning, this approach lays the foundation for tools that make quantum dot device design faster, capable of handling more complex architectures, and more amenable to automation, an important step toward scaling up quantum processors.

Transformers for spin qubit tuning

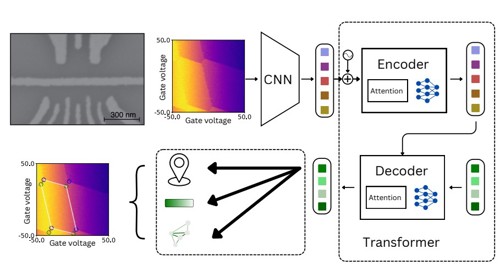

Turning quantum dot devices into controllable qubits is a highly complex tuning task. As quantum processors scale, this tuning becomes a critical bottleneck that necessitates automated routines. Recent work by the Ares group demonstrates a deep-learning approach that substantially reduces this bottleneck [3].

The TRACS algorithm (Transformers for Analyzing Charge Stability Diagrams) leverages modern computer vision techniques, using a neural network architecture akin to those powering large language models such as ChatGPT. Charge stability diagrams, which map the electronic configuration of a quantum dot device as gate voltages are varied, are central to identifying suitable configurations for qubit operation. TRACS automatically analyses these diagrams and extracts the exact graph structure of operating points, a capability that is essential for device characterization and qubit operation.
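
As a rough illustration of what a charge stability diagram encodes, the sketch below computes the ground-state charge configuration of a toy double quantum dot as two gate-induced charges are swept. It uses a simple electrostatic energy of the constant-capacitance type; the charging energies, the function names, and the dimensionless gate charges are illustrative assumptions, and the code is neither TRACS nor the QArray API.

```python
# Illustrative energies in arbitrary units (assumed values, not device data).
CHARGING_1 = 1.0   # charging energy of dot 1
CHARGING_2 = 1.0   # charging energy of dot 2
MUTUAL = 0.2       # electrostatic coupling between the dots


def energy(n1, n2, gate1, gate2):
    """Electrostatic energy of charge state (n1, n2) for given gate charges."""
    d1 = n1 - gate1
    d2 = n2 - gate2
    return 0.5 * CHARGING_1 * d1 * d1 + 0.5 * CHARGING_2 * d2 * d2 + MUTUAL * d1 * d2


def ground_state(gate1, gate2, max_charge=3):
    """Return the integer charge configuration with the lowest energy."""
    candidates = [(n1, n2)
                  for n1 in range(max_charge + 1)
                  for n2 in range(max_charge + 1)]
    return min(candidates, key=lambda n: energy(n[0], n[1], gate1, gate2))


def stability_diagram(gate_values):
    """Ground states on a grid: rows sweep gate 2, columns sweep gate 1."""
    return [[ground_state(g1, g2) for g1 in gate_values] for g2 in gate_values]


diagram = stability_diagram([0.1, 0.9])
```

The boundaries between regions of constant charge form the lines seen in a measured diagram, and the way those regions meet is the kind of graph structure that TRACS is described as extracting.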

To train TRACS for this complex task, the authors generated a large, realistic dataset using the GPU-accelerated simulator QArray [5]. This computationally intensive process, along with TRACS training, was parallelised across eight NVIDIA RTX 4000 Ada Generation GPUs. The resulting model achieves more than an order-of-magnitude speed-up over previous methods and generalises across different quantum dot device architectures without retraining. This capability is expected to become a core component of next-generation control systems, dramatically accelerating the development and deployment of large-scale quantum processors.

Meta-learning quantum dynamics

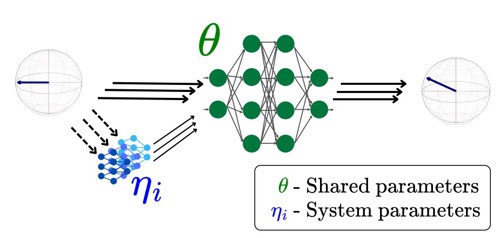

Meta-learning, or “learning to learn,” is a machine learning technique that accounts for variations between tasks. Rather than training and testing a neural network on a single task, meta-learning trains a network on a set of related but distinct tasks. This enables rapid adaptation to new tasks with limited data, a property of particular importance in quantum technologies, where data acquisition is costly and devices exhibit substantial variability.

Applied to quantum dynamics, meta-learning makes it possible to model an entire class of systems—such as Hamiltonians with varying parameters—rather than training a separate model for each case. The Ares group implements this by splitting the neural network parameters into two sets: shared parameters, which are common across all systems and capture the general structure of the Hamiltonian, and system parameters, which specify a particular instance of the Hamiltonian [4]. Both sets are optimised during training using a bi-level procedure. For a new system, however, only the system parameters need to be updated, enabling accurate modelling from very limited data.
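
The split into shared and system parameters can be sketched with a deliberately small model. Below, each system is a line `y = w * x + b`, where the slope `w` plays the role of the shared parameters and the offset `b` the role of the system parameters. The model, the function names, and the first-order outer update are assumptions chosen for brevity; the method in [4] uses neural networks and its own bi-level procedure.

```python
def fit_system(shared_w, xs, ys, steps=50, learning_rate=0.2):
    """Inner loop: adapt only the system parameter b, with shared_w frozen."""
    b = 0.0
    for _ in range(steps):
        grad_b = sum(2.0 * (shared_w * x + b - y) for x, y in zip(xs, ys)) / len(xs)
        b -= learning_rate * grad_b
    return b


def meta_train(tasks, outer_steps=200, learning_rate=0.05):
    """Outer loop: update the shared parameter w across all training systems.

    This is a first-order scheme: the dependence of the adapted b on w is
    ignored when computing the gradient with respect to w.
    """
    w = 0.0
    for _ in range(outer_steps):
        grad_w = 0.0
        for xs, ys in tasks:
            b = fit_system(w, xs, ys)
            grad_w += sum(2.0 * (w * x + b - y) * x for x, y in zip(xs, ys)) / len(xs)
        w -= learning_rate * grad_w / len(tasks)
    return w


# Synthetic training systems: same slope (2.0), different offsets.
inputs = [0.0, 1.0, 2.0, 3.0]
training_tasks = [(inputs, [2.0 * x + offset for x in inputs])
                  for offset in (-1.0, 0.5, 3.0)]
shared_w = meta_train(training_tasks)

# A new system seen through only two data points: adapt b alone.
new_b = fit_system(shared_w, [1.0, 2.0], [0.0, 2.0])
prediction = shared_w * 5.0 + new_b
```

The final lines mirror the adaptation step described above: the shared parameter stays fixed, and two data points are enough to pin down the single system parameter.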

Crucially, this framework also extends to non-Hamiltonian dynamics. The authors demonstrate that it can predict qubit characteristics in quantum dot systems from limited data. This approach has the potential to significantly accelerate the identification of optimal control parameters across different device architectures, ultimately overcoming limitations posed by device variability.

Accelerated meta-learning

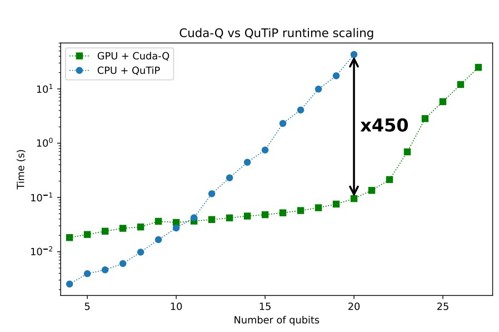

The training datasets for meta-learning quantum dynamics can be generated using CUDA-Q. Results from the Ares group show a substantial speedup in simulating a Heisenberg spin chain with CUDA-Q on an NVIDIA GPU compared to QuTiP on a CPU (Figure 4). The CUDA-Q simulation uses the state vector backend nvidia in FP32 with Trotterization and runs on a single RTX 4090 GPU. The QuTiP simulation uses the dynamics solver mesolve and runs on a 16-core AMD Ryzen Threadripper PRO 5955WX. CUDA-Q not only delivers speedups of more than two orders of magnitude but also enables the simulation of larger quantum systems. Leveraging the mgpu option for multi-GPU computing and more powerful hardware such as NVIDIA H100 Tensor Core GPUs is expected to yield even greater performance gains.
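
For readers unfamiliar with Trotterization, the sketch below evolves a small Heisenberg chain with a first-order Trotter scheme on a plain state vector. It is written in standard-library Python and does not use the CUDA-Q or QuTiP APIs; the function names, the nearest-neighbour Hamiltonian H = J Σ (XX + YY + ZZ) with open boundaries, and the bit ordering are assumptions made for illustration. It relies on the identity XX + YY + ZZ = 2·SWAP − I, which gives each bond's time evolution in closed form.

```python
import cmath
import math


def swap_bits(index, i, j):
    """Exchange bits i and j of a basis-state index."""
    if ((index >> i) & 1) != ((index >> j) & 1):
        index ^= (1 << i) | (1 << j)
    return index


def apply_bond(state, i, j, coupling, dt):
    """Apply exp(-i * dt * J * (XX + YY + ZZ)) on sites i and j.

    With XX + YY + ZZ = 2*SWAP - I and SWAP*SWAP = I, the exponential is
    exp(i*J*dt) * (cos(2*J*dt) * I - i * sin(2*J*dt) * SWAP).
    """
    phase = cmath.exp(1j * coupling * dt)
    c = math.cos(2.0 * coupling * dt)
    s = math.sin(2.0 * coupling * dt)
    return [phase * (c * state[k] - 1j * s * state[swap_bits(k, i, j)])
            for k in range(len(state))]


def trotter_evolve(state, n_sites, coupling, total_time, n_steps):
    """First-order Trotter evolution: apply the bonds one after another."""
    dt = total_time / n_steps
    for _ in range(n_steps):
        for site in range(n_sites - 1):
            state = apply_bond(state, site, site + 1, coupling, dt)
    return state


# Four spins with alternating orientation (basis state 0b0101), evolved to t = 1.
n_sites = 4
initial = [0j] * (2 ** n_sites)
initial[0b0101] = 1.0 + 0j
final = trotter_evolve(initial, n_sites, coupling=1.0, total_time=1.0, n_steps=100)
norm = sum(abs(a) ** 2 for a in final)
```

Neighbouring bonds do not commute, so the result is approximate and improves as `n_steps` grows. The state vector has 2^N entries for N spins, which is why larger chains quickly become a workload suited to GPUs.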

Outlook

Deep learning is accelerating progress in quantum computing, as illustrated here through applications in device optimisation, transformer-based experimental data analysis, and meta-learning of quantum dynamics. NVIDIA GPUs play a central role, not only in training these models, but also in generating the large-scale training datasets required through physics-based simulation. For quantum dynamics, the CUDA-Q platform delivers speedups of more than two orders of magnitude over CPU-based simulation in the benchmark described above.

Looking ahead, future research by the Ares group will focus on efficient design of complex quantum devices, integrating TRACS into online control routines, and pairing meta-learning predictions with optimisers, paving the way for more autonomous and scalable quantum device operation.

Case study reproduced by kind permission of NVIDIA.

References

[1] Jonas Schuff, Miguel J. Carballido, Madeleine Kotzagiannidis, Juan Carlos Calvo, Marco Caselli, Jacob Rawling, David L. Craig, Barnaby van Straaten, Brandon Severin, Federico Fedele, Simon Svab, Pierre Chevalier Kwon, Rafael S. Eggli, Taras Patlatiuk, Nathan Korda, Dominik Zumbühl, and Natalia Ares. Fully autonomous tuning of a spin qubit, 2024.

[2] Cornelius Carlsson, Jaime Saez-Mollejo, Federico Fedele, Stefano Calcaterra, Daniel Chrastina, Giovanni Isella, Georgios Katsaros, and Natalia Ares. Automated all-rf tuning for spin qubit readout and control, 2025.

[3] Rahul Marchand, Lucas Schorling, Cornelius Carlsson, Jonas Schuff, Barnaby van Straaten, Taylor L. Patti, Federico Fedele, Joshua Ziegler, Parth Girdhar, Pranav Vaidhyanathan, and Natalia Ares. End-to-end analysis of charge stability diagrams with transformers, 2025.

[4] Lucas Schorling, Pranav Vaidhyanathan, Jonas Schuff, Miguel J. Carballido, Dominik Zumbühl, Gerard Milburn, Florian Marquardt, Jakob Foerster, Michael A. Osborne, and Natalia Ares. Meta-learning characteristics and dynamics of quantum systems, 2025.

[5] Barnaby van Straaten, Joseph Hickie, Lucas Schorling, Jonas Schuff, Federico Fedele, and Natalia Ares. QArray: A GPU-accelerated constant capacitance model simulator for large quantum dot arrays. SciPost Phys. Codebases, page 35, 2024.
