Scientists at the Oxford Robotics Institute describe their research in teaching robots to better teach themselves, reducing the need for human intervention.

Learning from Demonstration with Minimal Human Effort

Learning from demonstration and reinforcement learning have been applied to many difficult problems in sequential decision making and control. In most settings, it is assumed that the demonstrations available are fixed. In this work, we consider learning from demonstration in the context of shared autonomy.

In shared autonomy systems, control may switch back and forth between an autonomous controller and a human teleoperator. Human input may be utilised to improve system capabilities or help recover from failures. Examples include autonomous driving, in which a teleoperator may be called to deal with unusual situations, or robots for disaster response which have limited autonomous capabilities.

We wish to minimise the burden on the human teleoperator. As such, human assistance should only be requested when necessary. Additionally, we wish to use the demonstrations and other experience gathered to improve autonomous performance, further reducing dependence on the human. The problem which arises is the central focus of this work:

How do we decide when autonomous performance is good enough, or when it is better to ask for a demonstration?

Problem Definition

We assume that the robot must perform episodic tasks. There is a cost for requesting a human demonstration, which represents the human effort associated with performing the demonstration. Additionally, there is a cost for failure, which represents the human effort required to recover the robot from failure, for example moving a mobile robot which is stuck or retrieving dropped objects in a manipulation task.

The robot may have an arbitrary number of controllers available including the human teleoperator, learnt controllers, and pre-programmed controllers (which might also be useful for reducing the human effort required).

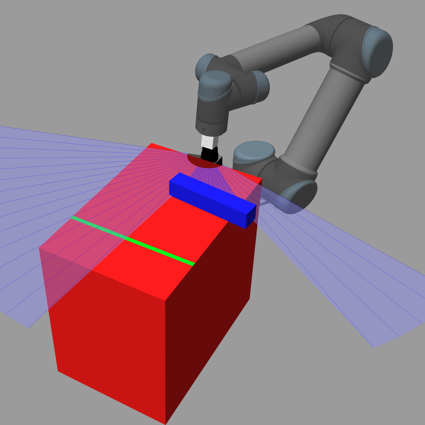

At the beginning of each episode, the robot encounters a random initial state. Based on the situation, the robot must decide which controller to choose to minimise the cumulative human cost of completing the task over many episodes. Possible initial states in our experimental domains are illustrated below.

Possible starting points in our experimental domains

Our Approach

Given the initial state, we use prior experience to estimate the probability of success for each controller, along with the uncertainty in this estimate. The continuous correlated beta process is used to compute these estimates [1]. One controller is learnt using deep reinforcement learning with demonstrations from the other controllers [2]. Because the performance of the learnt controller is non-stationary (it changes as training proceeds), its performance is estimated using only recent data.
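As a rough illustration of this estimation step, the sketch below computes a kernel-weighted Beta posterior over the success probability at a new initial state, in the spirit of the continuous correlated beta process [1]. The squared-exponential kernel, the length scale, the uniform Beta(1, 1) prior, the window size of 50 and all function names are assumptions made for illustration; they are not taken from the paper.

```python
import math
from collections import deque


def kernel(x, y, length_scale=1.0):
    """Squared-exponential similarity between two initial states (assumed kernel)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * length_scale ** 2))


def success_estimate(history, query, length_scale=1.0, prior=(1.0, 1.0)):
    """Return (mean, std) of a Beta posterior over success probability at `query`.

    `history` is an iterable of (initial_state, succeeded) pairs. Each past
    outcome adds a pseudo-count weighted by its similarity to `query`.
    """
    alpha, beta = prior
    for state, succeeded in history:
        weight = kernel(state, query, length_scale)
        if succeeded:
            alpha += weight
        else:
            beta += weight
    total = alpha + beta
    mean = alpha / total
    variance = alpha * beta / (total ** 2 * (total + 1.0))
    return mean, math.sqrt(variance)


# Non-stationary learnt controller: keep only the most recent outcomes.
recent_history = deque(maxlen=50)  # window size is an assumed value
recent_history.append(((0.0, 0.0), True))
recent_history.append(((0.1, 0.0), True))
recent_history.append(((2.0, 2.0), False))

# Estimate near the past successes, and near the past failure.
mean_near, std_near = success_estimate(recent_history, (0.05, 0.0))
mean_far, std_far = success_estimate(recent_history, (2.0, 2.1))
```

In this toy data the estimate is high near the two successful starts and low near the failed one, and the standard deviation grows for initial states that are far from anything seen so far.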

To make the final controller selection, we consider the problem as a contextual multi-armed bandit and apply an upper-confidence bound algorithm [3]. Based on the initial state (the context) we choose the controller with the best optimistic cost estimate.
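The selection rule can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation from the paper: the optimistic success probability is taken as the mean plus a multiple of the standard deviation, the expected cost is the cost of using the controller plus the failure cost weighted by the probability of failure, and the names `optimistic_cost`, `select_controller` and `exploration` are hypothetical.

```python
def optimistic_cost(p_mean, p_std, demo_cost, failure_cost, exploration=1.0):
    """Optimistic (lowest plausible) expected human cost for one controller."""
    p_optimistic = min(1.0, p_mean + exploration * p_std)
    return demo_cost + (1.0 - p_optimistic) * failure_cost


def select_controller(estimates, demo_costs, failure_cost, exploration=1.0):
    """Pick the controller whose optimistic cost estimate is lowest.

    `estimates` maps controller name -> (mean, std) of the success probability
    for the current initial state. `demo_costs` maps name -> cost charged just
    for using that controller (missing entries are treated as zero).
    """
    return min(
        estimates,
        key=lambda name: optimistic_cost(
            *estimates[name], demo_costs.get(name, 0.0), failure_cost, exploration
        ),
    )


demo_costs = {"human": 1.0}  # assumed cost of one demonstration
failure_cost = 5.0           # assumed cost of recovering from a failure

# Confident but mediocre learnt controller: asking the human is cheaper.
confident = {"human": (0.98, 0.01), "learnt": (0.60, 0.05)}
choice_confident = select_controller(confident, demo_costs, failure_cost)

# Same mean but high uncertainty: optimism favours trying the learnt controller.
uncertain = {"human": (0.98, 0.01), "learnt": (0.60, 0.30)}
choice_uncertain = select_controller(uncertain, demo_costs, failure_cost)
```

The second case shows why optimism matters: a controller whose performance is still uncertain in a given situation gets tried, which produces the data needed to sharpen its estimate.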

The video below demonstrates our approach when the learnt controller can perform some of the tasks. In cases where autonomous performance is estimated to be good, the autonomous controller is chosen. In cases where the probability of success is estimated to be low, a human demonstration is requested.

video 1

Training of the robot. As the learnt controller improves, the system rarely requests human assistance.

Key Outcomes

Our experimental results in three domains illustrated several key outcomes from this research:

- Our approach reduces the cumulative human cost relative to other common approaches such as giving all the demonstrations at the beginning. Intuitively, this is because our approach avoids unnecessary demonstrations, and reduces the number of failures by requesting assistance.

- Including a sub-optimal pre-programmed baseline controller may further reduce the human cost. This is because our approach can identify and selectively choose the situations in which such a controller performs well.

- Modifying the cost values assigned to demonstrations and failures changes the behaviour of the system. If the cost for failure is high, the controller selection will conservatively rely on more demonstrations; if it is low, the system will attempt more tasks autonomously.

- With a fixed budget of demonstrations, our approach results in a better policy. Intuitively, this is because our approach asks for more informative demonstrations.
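The effect of the cost values on the system's behaviour, noted in the outcomes above, can be seen in a simplified worked example. It assumes that a human demonstration always succeeds and ignores uncertainty, so the autonomous controller is preferred whenever its expected failure cost is below the demonstration cost. The function name and the numbers are hypothetical.

```python
def break_even_success_probability(demo_cost, failure_cost):
    """Success probability above which acting autonomously is cheaper.

    Autonomous expected cost: (1 - p) * failure_cost.
    Demonstration cost (assumed to always succeed): demo_cost.
    Setting the two equal gives p = 1 - demo_cost / failure_cost.
    """
    return max(0.0, 1.0 - demo_cost / failure_cost)


# Expensive failures: the robot must be quite reliable before acting alone.
threshold_costly_failure = break_even_success_probability(demo_cost=1.0, failure_cost=5.0)

# Cheap failures: a much less reliable controller is already worth trying.
threshold_cheap_failure = break_even_success_probability(demo_cost=1.0, failure_cost=2.0)
```

Raising the failure cost relative to the demonstration cost raises the threshold, so demonstrations are requested in more situations.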

Please check out the video summary below. For more details please refer to the paper.

For any questions contact Marc Rigter (mrigter@robots.ox.ac.uk).

References

[1] Goetschalckx, R., Poupart, P., & Hoey, J. (2011, June). Continuous correlated beta processes. In Twenty-Second International Joint Conference on Artificial Intelligence.

[2] Nair, A., McGrew, B., Andrychowicz, M., Zaremba, W., & Abbeel, P. (2018, May). Overcoming exploration in reinforcement learning with demonstrations. In 2018 IEEE International Conference on Robotics and Automation (ICRA) (pp. 6292-6299). IEEE.

[3] Li, L., Chu, W., Langford, J., & Schapire, R. E. (2010, April). A contextual-bandit approach to personalized news article recommendation. In Proceedings of the 19th International Conference on World Wide Web (pp. 661-670).

Content reproduced with kind permission from Marc Rigter.
