04 May 2020

DPhil student gains 2nd place in IEEE ISBI 2020 Best Paper Award

New technique combining eye-tracking data and machine learning is recognised by the IEEE International Symposium on Biomedical Imaging 2020

DPhil student Richard Droste from the Institute of Biomedical Engineering is named runner-up for Best Paper

Researchers from the Oxford Institute of Biomedical Engineering have been named runner-up for the Best Paper Award at the IEEE International Symposium on Biomedical Imaging (ISBI) 2020 for a novel method to identify anatomical landmarks in medical images. The winners were selected from over 400 papers accepted at the 17th edition of the conference, which was held virtually for the first time due to the COVID-19 pandemic.

The work, led by Professor Alison Noble and presented by first author and DPhil student Richard Droste, tackles the task of automatically identifying anatomical landmarks in medical images. This task is a crucial prerequisite for applications such as estimating the weight of a fetus in the womb in order to detect fetal growth restriction. It is typically addressed by training machine learning models on manual annotations of the anatomical structures that are believed to be important. However, such systems do not reveal which anatomical structures experts actually find informative in practice.

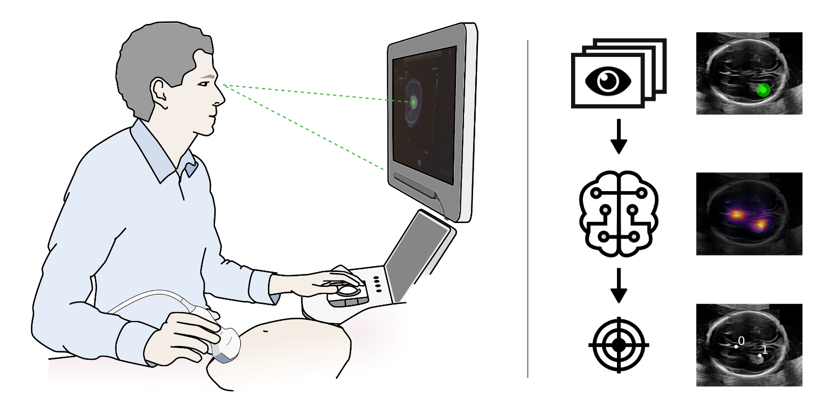

Diagram showing the set-up used to collect the eye-tracking data

Instead of manual annotations, Droste and colleagues train their model on eye-tracking data acquired from experts during clinical practice. In their paper, titled “Discovering Salient Anatomical Landmarks by Predicting Human Gaze”, they show that such a model can be used to identify the anatomical landmarks that operators pay attention to in practice. Moreover, the trained model can automatically detect these salient anatomical landmarks in new images for which no gaze-tracking data is available. The researchers believe that this work has wide-ranging applications, such as improving performance on downstream tasks, training inexperienced clinicians, and enabling automatic landmark detection without manual annotations.

The work is part of Perception Ultrasound by Learning Sonographic Experience (PULSE), an interdisciplinary research project exploring the use of artificial intelligence (AI)-based technologies to eliminate the need for highly trained operators in fetal ultrasound screening. To achieve this goal, large amounts of data are acquired from expert sonographers, and models are built that more closely mimic how humans make decisions from ultrasound images. Clinical expertise is brought to the project by Professor Aris Papageorghiou and Dr. Lior Drukker from the Nuffield Department of Women's and Reproductive Health.

The full list of authors includes Richard Droste, Pierre Chatelain, Harshita Sharma and Alison Noble from the Department of Engineering Science, and Lior Drukker and Aris T. Papageorghiou from the Nuffield Department of Women’s & Reproductive Health.

PULSE is funded by a European Research Council (ERC) grant.