Researchers at the Oxford Robotics Institute are investigating how to build 3D maps of the world that robots can then use to navigate and explore.

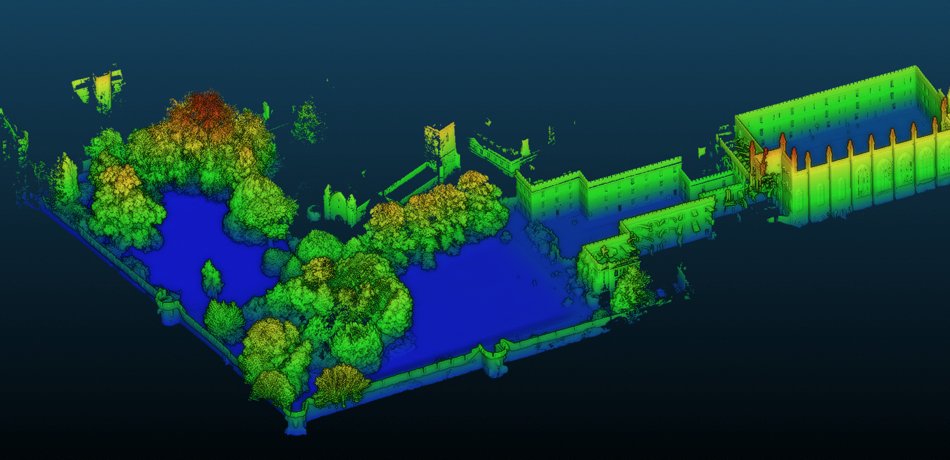

A 3D map of New College's Old Quad and Park coloured according to height

Have you ever wondered how 3D mapping systems such as Google Street View, or autonomous robots, see the world? Robotics researchers have used similar tools, built around futuristic sensors such as lasers and stereoscopic cameras, to create 3D reconstructions of New College’s medieval quad and of the courtyard leading into the garden area, which has a Mound at its centre.

In the last few months, robotics researchers from the Oxford Robotics Institute (ORI) have carried out a project to map the college in 3D.

Mapping is carried out at walking speed

Mapping an area in 3D gives robots the information they need to move around it. Dr Milad Ramezani, a post-doctoral researcher at the ORI, recently led a paper on this topic that will appear at the International Conference on Intelligent Robots and Systems (IROS) in November 2020. Dr Ramezani tells us, “Robust simultaneous localisation and mapping (SLAM) is a common problem in robotics research; solving it gives a robot knowledge about where it is located and what exists in its surroundings. Many SLAM systems have been developed in academia, and some have been commercialised. However, they have some limitations from either a hardware perspective or an algorithmic perspective.”

“What we would like to do is to develop a SLAM system which can be mounted on robots of any kind – wheeled, flying or walking robots (the latter being my group’s speciality).”

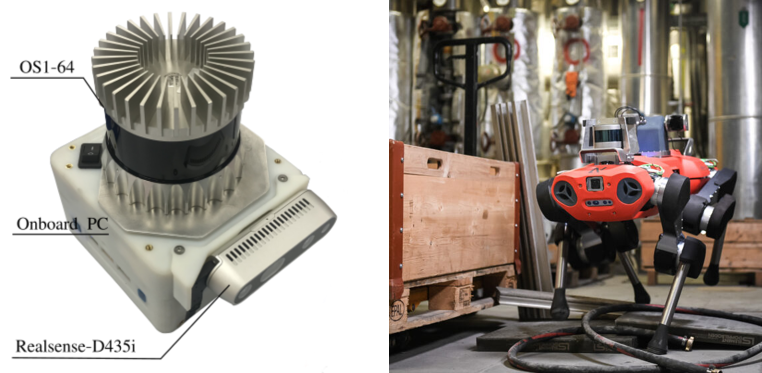

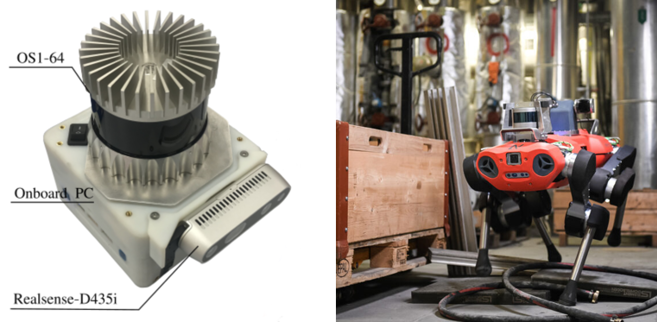

"That is why we developed a handheld device which consists of a 3D LiDAR sensor and a stereoscopic camera, each with a built-in Inertial Measurement Unit (IMU). Also, our handheld device includes an onboard computer on which we can run our software for real-time operations.”

The approach involves a special stereo camera and a laser scanner, which are carried through the college at walking pace. The cameras record pairs of images at 30 frames per second, while the laser sensor scans the area 10 times per second. Each scan measures thousands of 3D points where laser beams reflect off buildings and walls. ORI’s mapping software combines the visual and laser measurements into a unified map, as seen in the first figure.
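As a rough illustration of how measurements arriving at different rates might be lined up before they are combined, the sketch below pairs each laser scan with the camera frame closest to it in time. Only the 30 and 10 per second rates come from the description above; the function name and the synthetic timestamps are assumptions made for illustration, and this is not ORI’s software.

```python
from bisect import bisect_left


def nearest_frame(frame_times, t):
    """Return the index of the camera frame whose timestamp is closest to t.

    frame_times must be sorted in increasing order. (Hypothetical helper.)
    """
    i = bisect_left(frame_times, t)
    if i == 0:
        return 0
    if i == len(frame_times):
        return len(frame_times) - 1
    # Pick whichever neighbouring frame is closer in time.
    return i if frame_times[i] - t < t - frame_times[i - 1] else i - 1


# One second of synthetic timestamps at the rates quoted in the article.
frame_times = [k / 30 for k in range(30)]  # camera: 30 image pairs per second
scan_times = [k / 10 for k in range(10)]   # laser: 10 scans per second

# For every laser scan, the index of the closest camera frame.
matches = [nearest_frame(frame_times, t) for t in scan_times]
```

At these rates every laser scan lines up with every third camera frame, which is one reason precise synchronisation between the sensors matters.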

Ramezani explains, “At the outset the approach incrementally estimates the motion of the device by extracting features in each camera image or LiDAR scan – such as corners or lines. These features are re-detected in subsequent images or scans and are used to triangulate how the device must have moved. By combining many measurements over a short history (about 10 seconds) we create an optimisation problem which estimates both the motion of the device and a local map of the environment.”
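To give a feel for how re-detected features constrain motion, here is a deliberately simplified sketch. It assumes the same features have already been matched between two moments in time and works in 2D only, using a standard closed-form least-squares alignment rather than the windowed optimisation Ramezani describes. The function name and the test points are invented for illustration.

```python
import math


def estimate_rigid_motion_2d(prev_pts, curr_pts):
    """Least-squares rotation and translation mapping prev_pts onto curr_pts.

    Both lists hold matched (x, y) feature positions in the same order.
    Returns (theta, tx, ty) such that curr ~= R(theta) * prev + (tx, ty).
    """
    n = len(prev_pts)
    pcx = sum(p[0] for p in prev_pts) / n
    pcy = sum(p[1] for p in prev_pts) / n
    qcx = sum(q[0] for q in curr_pts) / n
    qcy = sum(q[1] for q in curr_pts) / n

    # Accumulate the dot and cross products of the centred point pairs.
    s_cos = 0.0
    s_sin = 0.0
    for (px, py), (qx, qy) in zip(prev_pts, curr_pts):
        ax, ay = px - pcx, py - pcy
        bx, by = qx - qcx, qy - qcy
        s_cos += ax * bx + ay * by
        s_sin += ax * by - ay * bx

    theta = math.atan2(s_sin, s_cos)
    c, s = math.cos(theta), math.sin(theta)
    # The translation moves the rotated centroid onto the new centroid.
    tx = qcx - (c * pcx - s * pcy)
    ty = qcy - (s * pcx + c * pcy)
    return theta, tx, ty


# Synthetic check: move four made-up features by a known transform.
true_theta, true_tx, true_ty = 0.1, 0.5, -0.2
prev_pts = [(1.0, 0.0), (0.0, 2.0), (-1.5, 0.5), (2.0, 2.0)]
c, s = math.cos(true_theta), math.sin(true_theta)
curr_pts = [(c * x - s * y + true_tx, s * x + c * y + true_ty) for x, y in prev_pts]

theta, tx, ty = estimate_rigid_motion_2d(prev_pts, curr_pts)
```

A real system has to cope with noisy and mismatched features in 3D, which is part of why many measurements are combined over a short history instead of relying on a single pair of frames.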

“If you look at New College, you have all kinds of physical structure, such as the regular quad and a courtyard, narrow tunnels and archways, while the garden area is of course very different. These different sections can cause different failure modes which the researcher needs to consider. For instance, the tunnel section is quite challenging for SLAM systems because its constriction makes motion estimation difficult. Another challenging problem in SLAM is recognising when a robot revisits a previously seen area. We use pattern recognition algorithms to compare to a database of prior images to achieve this. This is crucial because it allows us to remove drift from the map and to keep the map globally consistent as the robot (or person in this case) explores the environment.”
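The idea of comparing the current view against a database of earlier ones can be sketched as follows. This is a toy version under stated assumptions: each image is taken to be already summarised as a short numeric descriptor, similarity is measured with the cosine of the angle between descriptors, and the place names, descriptor values and 0.9 threshold are all invented. The article does not say which pattern recognition algorithm the team uses.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length descriptor vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def find_revisit(query, database, threshold=0.9):
    """Return the id of the best-matching stored place, or None if no
    stored descriptor reaches the similarity threshold."""
    best_id, best_score = None, threshold
    for place_id, descriptor in database.items():
        score = cosine_similarity(query, descriptor)
        if score >= best_score:
            best_id, best_score = place_id, score
    return best_id


# Hypothetical descriptors for places seen earlier in the walk.
database = {
    "quad": [0.9, 0.1, 0.0, 0.2],
    "tunnel": [0.1, 0.8, 0.3, 0.0],
    "garden": [0.0, 0.2, 0.9, 0.4],
}

revisit = find_revisit([0.85, 0.15, 0.05, 0.2], database)  # resembles "quad"
no_match = find_revisit([0.5, 0.5, 0.5, 0.5], database)    # resembles nothing stored
```

Once a revisit is detected, the mapping system can add a constraint tying the current position to the earlier one, which is what allows accumulated drift to be removed.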

The team’s mapping technique is both accurate and fast. Ramezani adds, “Our dataset is unique because we also created a large ‘ground truth’ map using a tripod-mounted laser scanner usually used for quantity surveying. Our system produces a map with similar reconstruction accuracy, meaning that when the maps are overlaid they are close to identical. The major advantage of our work is the speed of data collection. For example, scanning New College with a tripod scanner requires a series of static scans, which took us 8 hours. Our handheld system collects the map in the time it takes to walk around the college – just 20 minutes.”

“Additionally, we have now heavily tested our system in very large environments.”

“The hardware we use has different high-tech sensors that are precisely calibrated and synchronised with one another. Our algorithms have sensor redundancy to survive certain failure modes (such as aggressive turning) where other SLAM systems are likely to fail.”

The device combines lasers and stereo cameras which are also used on ORI’s walking robots.

There are many possibilities for the future. Ramezani says, “Our system can have varied applications. For example, our DPhil student, Yiduo Wang, is now building a reconstruction system upon our SLAM system. Using the ‘point cloud’ map from the SLAM system, his work builds a globally consistent map of the space which is occupied in 3D, which can be used to plan a robot’s motions to avoid collisions.”
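One common way to turn a point cloud into a map of occupied space is to divide the world into small cubes (voxels) and mark every cube that contains at least one measured point. The sketch below shows that idea in its simplest form. It is an assumption about the general technique, not a description of Wang’s system, and the function names, voxel size and coordinates are made up.

```python
import math


def voxel_of(point, voxel_size):
    """Integer (i, j, k) index of the voxel containing a 3D point."""
    return tuple(math.floor(c / voxel_size) for c in point)


def occupied_voxels(points, voxel_size):
    """Set of voxels that contain at least one point of the cloud."""
    return {voxel_of(p, voxel_size) for p in points}


def waypoints_are_free(waypoints, occupied, voxel_size):
    """True if no waypoint falls inside an occupied voxel.

    Only the waypoints themselves are checked, not the path between them.
    """
    return all(voxel_of(w, voxel_size) not in occupied for w in waypoints)


# A tiny made-up point cloud (metres) and a 0.5 m voxel grid.
cloud = [(0.1, 0.1, 0.1), (0.2, 0.15, 0.05), (1.3, 0.2, 0.1)]
occupied = occupied_voxels(cloud, 0.5)

safe = waypoints_are_free([(0.7, 0.2, 0.1)], occupied, 0.5)
blocked = waypoints_are_free([(0.7, 0.2, 0.1), (1.2, 0.1, 0.1)], occupied, 0.5)
```

A planner would use a check like this to reject candidate motions that pass through occupied space.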

The research can also be combined with other fields in unexpected ways: “We have also just started a research collaboration with the Department of Geography. We have recently mapped a small area of Wytham Woods (the University’s experimental forest). Studying attributes such as tree diameter and branch structure could be done automatically by our method, rather than by laborious hand measurements, as in the present method. Geographers use such measurements to estimate forest health and to estimate carbon capture.”
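As one illustration of how a tree diameter might be read off a 3D map, the sketch below fits a circle to a horizontal slice of points on a trunk using a standard algebraic least-squares fit. The article does not describe how the team measures diameter, so the approach, the function name and the synthetic trunk (0.30 m across, seen from one side only) are all assumptions.

```python
import math


def fit_circle(points):
    """Algebraic least-squares circle fit to (x, y) points.

    Returns (centre_x, centre_y, radius). Raises ValueError if the points
    are (nearly) collinear or coincident, where no circle is defined.
    """
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n

    # Moments of the points relative to their centroid.
    suu = svv = suv = suz = svz = 0.0
    for x, y in points:
        u, v = x - mx, y - my
        z = u * u + v * v
        suu += u * u
        svv += v * v
        suv += u * v
        suz += u * z
        svz += v * z

    det = suu * svv - suv * suv
    if det <= 1e-12 * (suu + svv) ** 2:
        raise ValueError("points do not define a circle")

    # Solve the 2x2 normal equations for the centre (relative to centroid).
    uc = 0.5 * (suz * svv - svz * suv) / det
    vc = 0.5 * (svz * suu - suz * suv) / det
    radius = math.sqrt(uc * uc + vc * vc + (suu + svv) / n)
    return mx + uc, my + vc, radius


# Synthetic trunk slice: half of a circle centred at (2, 3) with radius 0.15 m.
angles = [k * math.pi / 19 for k in range(20)]
trunk_slice = [(2.0 + 0.15 * math.cos(a), 3.0 + 0.15 * math.sin(a)) for a in angles]

cx, cy, radius = fit_circle(trunk_slice)
diameter = 2 * radius
```

Real trunks are not perfect circles and real scans are noisy, so a practical tool would need to handle outliers and irregular shapes.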

Take a look at the 3D maps and different usages of the dataset. The team hopes to be back at New College soon with their four-legged robot, mapping autonomously.

Thanks to DPhil student Russell Buchanan and New College for kind permission to reproduce part of his original article from the New College website.
